SoC design: When is a network

- ICDesign

- 2023-09-23 23:01:20

In the not-so-distant past, system-on-chip (SoC) devices were relatively simple compared to today’s offerings. Early SoCs typically consisted of 10 to 20 intellectual property (IP) blocks, each generally composed of around 10,000 to 50,000 logic gates. Most of these IPs, including the processor and peripheral functions, were licensed from third-party vendors. Developers normally created only one or two IPs containing the “secret sauce” that differentiated their SoC from other competitive offerings.

A classic bus architecture approach was used when interconnects requiring a limited number of IPs to communicate with each other were relatively simple. Designers responsible for very few initiator IPs—which orchestrated the data transaction requests combined with multiple target IPs that responded to those requests—used this method.

As the number and size of IPs increased and they assumed the role of initiators, it became necessary to adopt more sophisticated interconnect architectures in the form of crossbar switches. A crossbar switch was advantageous since it allowed any initiator IP to talk to any target IP. However, as the capacity and complexity of SoCs continued to increase, crossbar switches posed challenges such as routing congestion, excessive use of silicon die area, and power consumption.

Enter the NoC

Today’s SoCs can contain hundreds of IP blocks, each with more logic gates than earlier generations. Typically, third-party vendors provide most of these IPs. In addition to variations in data bus width, control bus functionality, and operating frequency, each IP may employ one of the many interface protocols—OCP, APB, AHB, AXI, STBus, and DTL—that have been defined and adopted by SoC designers.

The solution to the challenge of increasing numbers of IPs in today’s SoCs is to employ network-on-chip (NoC). Each IP is equipped with a socket function that interfaces with the NoC. In the case of a write access triggered by an initiator, the socket serializes and packetizes the data being generated by that IP and transports it to its intended target. In turn, the socket associated with the target will extract the data to write from the packet and transform it into the destination protocol. There can be multiple in-flight packets at the same time.

This NoC or that NoC?

NoCs can be implemented with a wide variety of topologies, including irregular trees, regular rings, and mesh or torus architectures. Higher-dimensional architectures may also be employed for certain designs, including three-dimensional cubes and four- and five-dimensional hypercubes.

In most cases, a single NoC does not suffice, and designers often break down their interconnects into a combination of dozens of NoCs. While a non-cache-coherent NoC satisfies the requirements of most peripherals, IPs such as processors and accelerators typically benefit from the capabilities of a cache-coherent NoC. Also, some portions of the SoC, for instance, an artificial intelligence/machine learning (AI/ML) processing node array, may benefit from a meshed NoC architecture. As a result, an SoC can easily feature a combination of multiple NoC topologies to achieve optimal results.

When is a NoC not enough?

In addition to the sockets used to interface the IPs to the NoC, the network will also include the necessary switches and buffers. Furthermore, as the process nodes used to implement SoCs continue to shrink, the resistance of the tracks increases, leading to resistor–capacitor delays associated with signals as they travel through the device. As a result, signals can no longer traverse all or a portion of the device in a single clock cycle. Addressing this problem requires the insertion of pipeline registers in the signal pathways. However, optimizing their number and location is a difficult task.

Figure 1 Here is a broad view of a typical NoC configuration and implementation flow. Source: Arteris IP

The development of an SoC is twofold—the front-end (upstream) and the back-end (downstream) design (see Figure 1). The front-end design focuses on the logical and functional aspects, such as which IPs to use, how to connect them, and how to architect the network to ensure expected throughput and latency. The back-end design, on the other hand, deals with the physical aspects of the chip, including where to place the IPs in relation to each other and whether the timing can be met. It is only at this stage that designers will know how many and where to place pipeline registers, bringing them all the way back to the front-end activities.

Performing a full-up physical layout is a time-intensive process. Anything that cannot be addressed in the back end has to be reworked in the front end, which restarts the time-consuming physical layout process (see Figure 2). Being able to proactively insert the pipeline registers early in the flow would be extremely valuable for the convergence of the implementation tasks and the overall project turn-around time.

Figure 2 Long-timing paths can span across the entire SoC. Source: Arteris IP

This puts a lot of pressure on the front-end design team who do not necessarily have all the required information to make an educated decision. Inserting too few pipeline registers would result in the SoC failing to meet timing in the physical layout. This results in a tendency to overengineer the problem by inserting too many pipeline stages. Although the timing may be met, having too many stages increases latency and power consumption.

The result of all this is that simply having a raw NoC in the form of sockets, switches, buffers, and pipeline registers is not enough. In order to verify performance and achieve timing, it’s necessary for the NoC’s implementation tools to understand the environment in which they will serve.

Physically aware NoC

In a “physically aware” NoC IP, front-end designers can use early IP placement information from the physical layout team to predict implementation ability and resolve any potential timing issues. As part of this, the NoC tools can automatically define the ideal number and location of pipeline registers in the RTL and generate the associated implementation constraints.

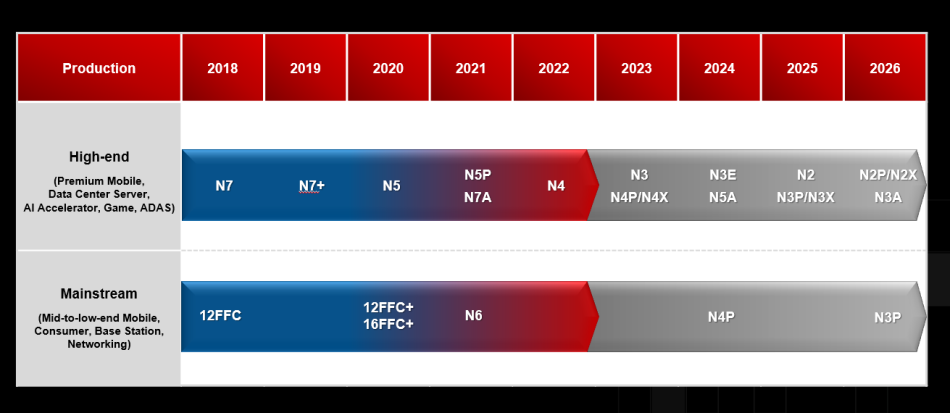

Today’s NoC technologies go far beyond what many designers might expect. For example, the NoC generator leverages geometry and delay information specific to the process technology node. This information allows us to further refine the timing estimations and determine the pipeline register requirements and locations.

By refining the front-end design in this way and providing more detailed information to the back-end tools, the overall process and the first physical implementation in particular can be dramatically improved. This can lead to a significant reduction in the number of back-end to front-end iterations, thereby reducing risk, increasing productivity, lowering costs, and speeding time to market and time to money.

Related Content

SoC Interconnect: Don’t DIY!Network on chip eases IP connectWhat is the future for Network-on-Chip?The network-on-chip interconnect is the SoCArteris spins packet-based network-on-chip IPSoC design: When is a network由Voice of the EngineerICDesignColumn releasethank you for your recognition of Voice of the Engineer and for our original works As well as the favor of the article, you are very welcome to share it on your personal website or circle of friends, but please indicate the source of the article when reprinting it.“SoC design: When is a network”