Desktop DC source shows real precision where it’s needed

- Analog

- 2023-09-23 21:19:15

I’ve always been intrigued by high-precision instruments, as they usually represent the best of engineering design, craftsmanship, elegance, and even artistry. One of the earliest and still best examples that I recall was the weigh-scale design by the late, legendary Jim Williams, published nearly 50 years ago in EDN. His piece, “This 30-ppm scale proves that analog designs aren’t dead yet,” details how he designed and built a portable, AC-powered scale for nutritional research using standard components and with extraordinary requirements: extreme resolution of 0.01 pound out of 300.00 pounds, accuracy to 30 parts per million (ppm), and no need for calibration during its lifetime.

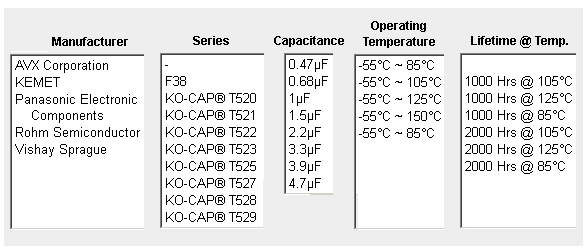

Jim’s project was a non-production, one-off unit. While its schematic (Figure 1) is obviously important, it tells only part of the story; there are many more lessons in his description.

Figure 1 This schematic from Jim Williams’ 1976 EDN article on design of a precision scale teaches many lessons, but there’s much more than just the schematic to understand. Source: Jim Williams

To meet his objectives, he identified every source of error or drift and then methodically minimized or eliminated each one via three techniques: using better, more-accurate, more-stable components; employing circuit topologies which self-cancelled some errors; and providing additional “insurance” via physical EMI and thermal barriers. In this circuit, the front-end needed to extract a minuscule 600-nV signal (least significant digit) from a 5-V DC level, a very tall order.
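To put those numbers in perspective, here is a minimal Python sketch that converts them to parts per million. It uses only figures quoted in this article; the helper name `ratio_ppm` is made up for illustration and the arithmetic is deliberately simplistic.

```python
def ratio_ppm(part, whole):
    """Return part/whole expressed in parts per million."""
    return part / whole * 1e6

# Display resolution: 0.01 lb out of 300.00 lb full scale
counts = round(300.0 / 0.01)            # number of distinct display steps
resolution_ppm = ratio_ppm(0.01, 300.0)

# Front-end challenge: a 600-nV least significant digit on a 5-V DC level
signal_ppm = ratio_ppm(600e-9, 5.0)

print(f"resolution: 1 part in {counts} ({resolution_ppm:.1f} ppm)")
print(f"600 nV on 5 V: {signal_ppm:.2f} ppm")
```

The display resolution works out to one part in 30,000 (about 33 ppm), in line with the 30-ppm accuracy goal, while the 600-nV digit is only about 0.12 ppm of the 5-V level it rides on.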

I spoke to Jim a few years before his untimely passing, after he had written hundreds of other articles (see “A Biography of Jim Williams”), and he vividly remembered that design and the article as the event which made him realize he could be a designer, builder, and expositor of truly unique precision, mostly analog circuits.

Of course, it’s one thing to handcraft a single high-performance unit, but it’s a very different thing to build precision into a moderate production-volume instrument. Yet companies have been doing this for decades, as typified by, but certainly not limited to, Keysight Technologies (formerly known as Agilent and, prior to that, Hewlett-Packard); there are too many others to cite here.

Evidence of this is seen in the latest generation of optical test and measurement instruments, designed to capture and count single photons. That’s certainly a mark of extreme precision because individual photons generally don’t have much energy, don’t like to be assessed or captured, and self-destruct when you look at them.

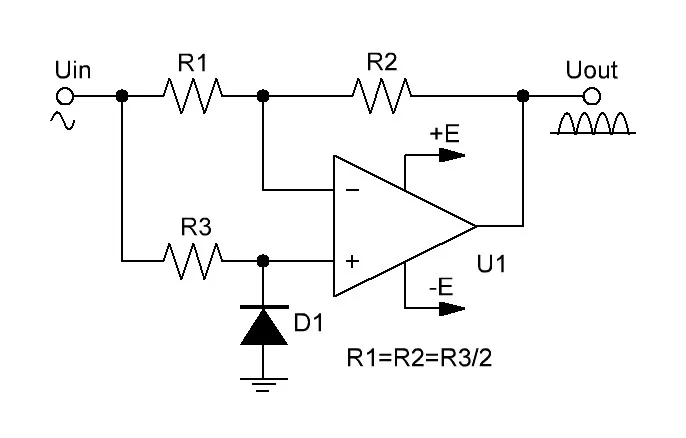

I recently came across another instrument that takes a simple function to an extreme level of precision: the DC205 Precision Voltage Source from Stanford Research Systems. This desktop unit is much more than just a power supply, as it provides a low-noise, high-resolution output which is often used as a precision bias source or threshold in laboratory-science experiments (Figure 2).

Figure 2 This unassuming desktop box represents an impressively high level of precision and stability in an adjustable voltage source. Source: Stanford Research Systems

Its bipolar, four-quadrant output delivers up to 100 V with 1-μV resolution and up to 50 mA of current. It offers true 6-digit resolution with 1 ppm/°C stability (24 hours) and 0.0025 % accuracy (one year).

Two other features caught my attention. First, it uses a linear power supply (yes, they are still important in specialty applications) to minimize output noise, presumably only for the voltage-output block rather than for the entire instrument. Second, it includes a DB-9 RS-232 connector in addition to its USB and fiber-optic interfaces. I haven’t seen an RS-232 interface in quite a while, but I presume they had a good reason to include it.

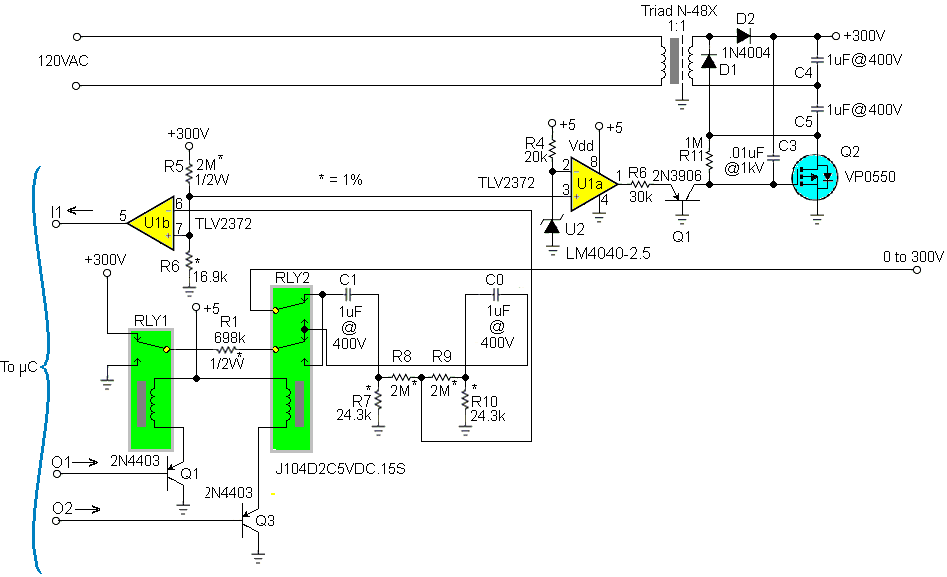

The block diagram in the User’s Manual reveals relatively little, except to indicate the unit has three core elements which combine to deliver the instrument’s performance: a low-noise, stable voltage reference; a high-resolution digital-to-analog converter; and a set of low-noise, low-distortion amplifiers (Figure 3).

Figure 3 As with Jim Williams’ scale, the core functions of the DC205 look simple, and may be so, but it is also the unrevealed details of the implementation that make the difference in achieving the desired performance. Source: Stanford Research Systems

I certainly would like to know more of the design and build details that squeeze such performance out of otherwise standard-sounding blocks.

As this unit targets lab experiments in physics, chemistry, and biology, it also includes a feature that conventional voltage sources would not include: a scanning (ramping) capability. This triggerable voltage-scanning feature gives the user control over start and stop voltages, scan speed, and scan function. Scan times are settable from 100 ms to 10,000 s, and the scan function can be either a ramp or a triangle wave. Further, for operating in the 100-V “danger zone,” the user must plug a jumper into the rear panel to deliberately and consciously allow operation in that region.
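The scan behavior described above can be pictured with a short Python sketch of the ideal setpoint versus time. This is purely illustrative: `scan_voltage` is a hypothetical helper, not the instrument’s firmware or remote-command API, and it assumes that a triangle scan spends one full scan time on each leg, which the manual may define differently.

```python
def scan_voltage(t, start_v, stop_v, scan_time_s, shape="ramp"):
    """Ideal setpoint (volts) at time t seconds into a scan.

    Hypothetical model for illustration only.
    ramp:     start -> stop over scan_time_s, then hold at stop
    triangle: start -> stop -> start, repeating; each leg is assumed
              to take scan_time_s
    """
    # Range quoted in the article: 100 ms to 10,000 s
    if not 0.1 <= scan_time_s <= 10_000:
        raise ValueError("scan time outside the 100 ms to 10,000 s range")
    span = stop_v - start_v
    if shape == "ramp":
        # Clamp so the output holds at the end points outside the scan window
        frac = min(max(t / scan_time_s, 0.0), 1.0)
    elif shape == "triangle":
        phase = (t / scan_time_s) % 2.0      # 0..1 rising leg, 1..2 falling leg
        frac = phase if phase <= 1.0 else 2.0 - phase
    else:
        raise ValueError("shape must be 'ramp' or 'triangle'")
    return start_v + span * frac


# Example: a 10-s scan from -1 V to +1 V, sampled at a few instants
for t in (0, 5, 10, 15):
    print(t, scan_voltage(t, -1.0, 1.0, 10.0, "ramp"),
          scan_voltage(t, -1.0, 1.0, 10.0, "triangle"))
```

In a real experiment the instrument generates the scan itself after a trigger; a model like this is mainly useful for planning how long a sweep will dwell near the voltages of interest.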

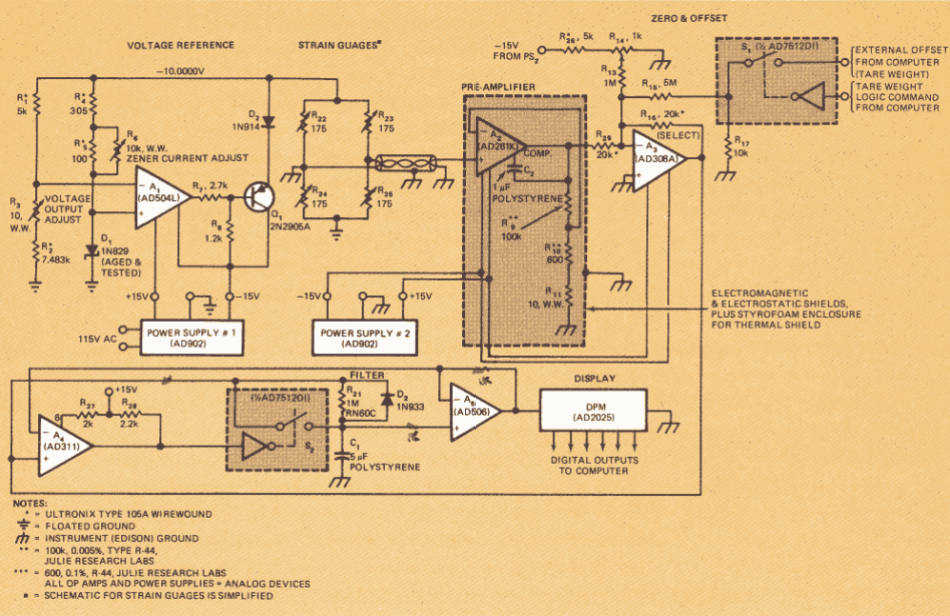

In addition to the DB-9 RS-232 interface supporting legacy I/O and an optical link for state-of-the-art I/O, I noticed another interesting feature called out in the well-written, crisp, and clear user’s manual: how to change the AC-line voltage setting. Some instruments I have seen use a slide switch and some use plug-in jumpers (don’t lose them), but this instrument uses a rotating thumbwheel selector, as shown in Figure 4.

Figure 4 Even a high-end instrument must deal with different nominal power-line voltages, and this rotary switch in the unit makes changing the setting straightforward and resettable. Source: Stanford Research Systems

In short, this is a very impressive standard-production instrument with precision specifications and performance, with what seems like a very reasonable base price of around $2300.

I think about it this way: In the real world of sensors, signal conditioning, and overall analog accuracy, achieving stable, accurate performance to 1% is doable with reasonable effort; getting to 0.1% is much harder; and reaching 0.01% is a real challenge. Yet both custom and production-instrumentation designers have mastered the art and skill of going far beyond those limits.
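As a rough way to compare those accuracy tiers with the figures quoted earlier, the Python sketch below converts each percentage to ppm and to an “equivalent bits” figure. Equating accuracy with one least-significant bit of full scale is my simplifying assumption for illustration, not how a datasheet specification is actually defined, and the function names are made up.

```python
import math

def percent_to_ppm(pct):
    """Convert a percentage to parts per million (1 % = 10,000 ppm)."""
    return pct * 1e4

def equivalent_bits(pct):
    """Bits needed so that one LSB equals pct percent of full scale."""
    return math.log2(100.0 / pct)

tiers = [
    ("doable", 1.0),
    ("much harder", 0.1),
    ("real challenge", 0.01),
    ("DC205 one-year accuracy", 0.0025),
    ("Williams scale, 30 ppm", 0.003),
]

for label, pct in tiers:
    print(f"{label:26s} {pct:>7} %  {percent_to_ppm(pct):>8.0f} ppm  "
          f"~{equivalent_bits(pct):.1f} bits")
```

Seen this way, 1% corresponds to under 7 bits, while both the 0.0025% source and the 30-ppm scale sit at roughly 15 bits of true, long-term accuracy, which is a very different thing from 15 bits of converter resolution.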

It’s similar to when I first saw a list of fundamental physical constants, such as the mass or moment of an electron, which had been measured (not defined) to seven or eight significant figures with an error in only that last digit. I felt compelled to do further investigation to understand how they reached that level of precision and confidence, and how they credibly assessed their sources of error and uncertainty.

What’s the tightest measurement or signal-source accuracy and precision you have had to create? How did you confirm the desired level of performance was actually achieved—if it was?

Bill Schweber is an EE who has written three textbooks, hundreds of technical articles, opinion columns, and product features.

Related Content

- Power needs go beyond just plain voltage and current
- Learning to like high-voltage op-amp ICs
- Jim Williams’ contributions to EDN
- The Quest for Quiet: Measuring 2nV/√Hz Noise and 120dB Supply Rejection in Linear Regulators, Part 2
- Transistor ∆VBE-based oscillator measures absolute temperature