Aspiring European Nvidia aims DPUs at embedded AI

- ICDesign

- 2023-09-23 23:01:23

The IP-CUBE project, led by Kalray, has been selected under the “Technological Maturation and Demonstration of Embedded Artificial Intelligence Solutions” call for projects, part of the “France Relance 2030 – Future Investments” initiative, to accelerate AI and edge computing designs. The project aims to lay the foundations of a French semiconductor ecosystem for edge computing and embedded AI.

These AI solutions, deployed in embedded systems as well as in local data centers (also known as edge data centers), process data closer to where it’s generated. To do so, embedded AI and edge computing designs require new types of processors and semiconductor technologies that can accelerate AI algorithms while meeting demanding requirements for high performance, low power consumption, low latency, and robust security.

The €36.7 million IP-CUBE project is led by Kalray, whose Dolomites data processing unit (DPU) is at the heart of this design initiative. A DPU is a new type of low-power, high-performance programmable processor that can process data on the fly while serving multiple applications in parallel. Other participants in the IP-CUBE project include network-on-chip IP supplier Arteris, security IP supplier Secure-IC, and low-power RISC-V component supplier Thales.

“In the current geopolitical context, the semiconductor industry has become essential, both in terms of production tools and technological know-how for designing processors,” said Eric Baissus, CEO of Kalray. “France and Europe need production plants, but they also need companies capable of designing the processors that will be manufactured in these plants.”

Kalray claims it’s the only company in France and Europe to offer DPUs. Its DPU processors and acceleration cards are based on the company’s massively parallel processor array (MPPA) architecture. The French DPU supplier is also part of other collaborative projects such as the European Processor Initiative (EPI).

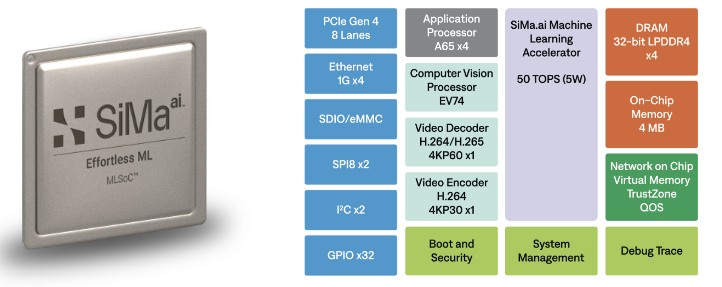

Arteris, another participant in the IP-CUBE project, has recently licensed its high-speed network-on-chip (NoC) interface IP to Axelera AI, the Eindhoven, Netherlands-based supplier of AI solutions at the edge. The Dutch company’s Metis AI processing unit (AIPU) is equipped with four homogeneous AI cores built for complete neural network inference acceleration. Each AI core is self-sufficient and can execute all standard neural network layers without external interactions.

The SoC integrates the four cores alongside a RISC-V controller, a PCIe interface, an LPDDR4X controller, and a security block, all connected via a high-speed NoC. Here, Arteris FlexWay interconnect IP uses area-optimized interconnect components to address smaller SoCs.

These developments highlight some of the AI-related advances in Europe and a broad realization that a strategic and open semiconductor ecosystem should be built around AI applications. Smaller SoC designs targeted at edge computing and embedded AI will be an important part of this undertaking.

Source: Voice of the Engineer, ICDesign column release.